JJ Spaun Wife: Behind the Scenes of the Golfer’s Support System

Beyond the Public Eye: Privacy and Respect

Protecting Personal Information in the Digital Age

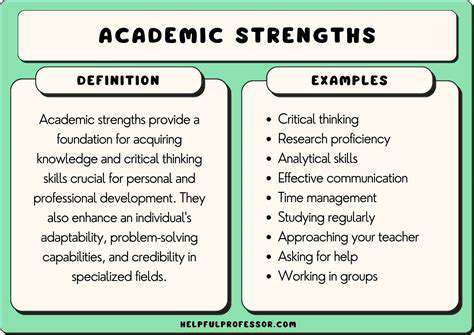

Our digital shadows grow longer each day, often without our conscious realization. Every online interaction leaves breadcrumbs that data brokers eagerly collect and package, creating detailed profiles far beyond what most people would willingly share. This invisible exchange happens constantly: when we use loyalty cards at supermarkets, browse products online, or even just carry our smartphones through shopping malls. The cumulative effect creates startlingly accurate digital doppelgängers that influence everything from our credit scores to our job prospects.

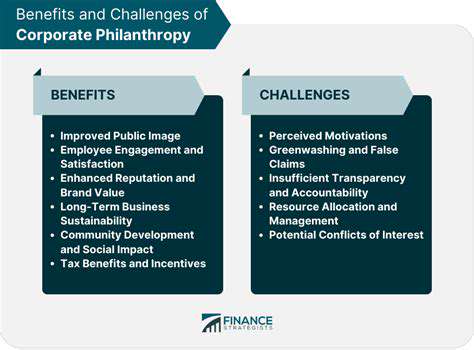

The Role of Data Collection in Modern Businesses

Contemporary commerce operates on a currency of personal data, with companies trading insights about consumer behavior as aggressively as stocks. While personalized recommendations can enhance user experience, the line between helpful customization and invasive profiling grows increasingly blurry. Ethical businesses now face the challenge of using data to improve their products without crossing into exploitation, typically by adopting privacy-by-design principles that build protection into their systems from the ground up.

The Impact of Social Media on Privacy

Platforms designed for connection often become conduits for surveillance, both corporate and personal. The average user shares approximately 1.8 million pieces of personal data annually through social media activities, often without realizing the long-term implications. Location check-ins reveal travel patterns, tagged photos provide facial recognition data, and even casual likes contribute to psychological profiles. This voluntary surrender of privacy creates permanent records that outlast changing attitudes and life circumstances.

Government Regulations and Data Protection

Legal frameworks struggle to keep pace with technological advancement, creating a patchwork of protections across jurisdictions. The GDPR's right to erasure (Article 17, commonly called the "right to be forgotten") represents a significant step forward, but it applies only within the regulation's scope, and enforcement remains inconsistent globally. Businesses operating internationally now face the complex challenge of complying with sometimes contradictory regulations, while consumers grapple with understanding rights that vary by geographic location. This regulatory unevenness creates vulnerabilities that sophisticated data harvesters can exploit.

Ethical Considerations in Data Analysis

Algorithmic decision-making inherits the biases of its creators, often amplifying societal prejudices under the guise of objectivity. Predictive policing software demonstrates how historical data can perpetuate discrimination when fed into supposedly neutral systems. The data science community increasingly recognizes that fairness must be actively engineered into models, requiring diverse development teams and rigorous bias testing protocols. Without these safeguards, automated systems risk codifying and scaling human prejudices.

Emerging Technologies and Privacy Concerns

Cutting-edge innovations frequently outpace our ability to understand their privacy implications. Facial recognition technology, for instance, can identify individuals in crowds with alarming accuracy, while emotion-detection algorithms claim to interpret feelings from micro-expressions. These capabilities develop faster than societal consensus about their appropriate use, creating tension between technological possibility and ethical boundaries. The lack of clear standards allows questionable applications to become normalized before meaningful oversight can be established.

The Future of Privacy in a Data-Driven World

Tomorrow's privacy landscape will require fundamentally rethinking our relationship with personal data. As quantified-self technologies become mainstream, everything from our heart rates to our sleep patterns will generate constant data streams. Protecting privacy in this environment demands new paradigms of ownership and control, potentially including personal data vaults with granular sharing permissions. The alternative, a world where every action and reaction is recorded, analyzed, and monetized, threatens to eliminate private thought itself.
